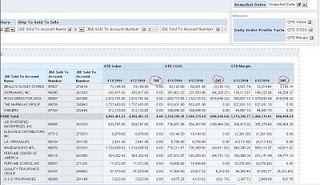

I found this worth blogging for because it is fairly common that people want to put a default value on date dashboard prompts as current date or yesterday or any other dates that changes dynamically. So in this blog, I will just focus on setting current date as default in the date dashboard prompt.

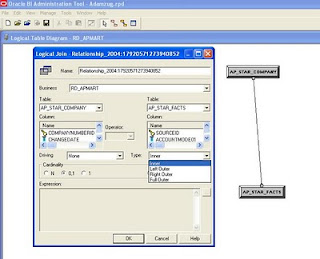

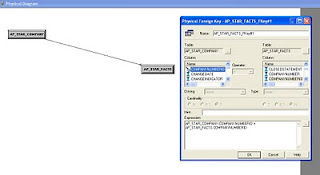

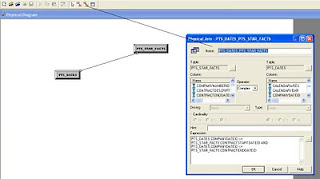

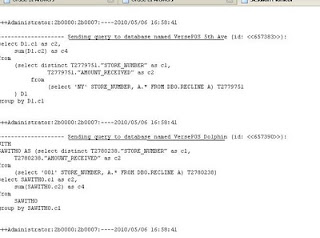

Unlike column filters where we can enter 'current_date' in the SQL expression of the 'Add' tab of the filter property, we can't really do the same in dashboard prompt property. Therefore, the first way of doing it will be creating dynamic variable in Admin tool with a query statement like 'select sysdate from dual' in the initialization block, and call this dynamic variable in the dashboard prompt property (Go to prompt property ---Default to--- Server variable--- enter dynamic variable name).

I highly recommend creating this current date variable in every OBIEE project because it can be beneficial to a lot of people in a lot of areas with similar needs. However, creating this dynamic variable will requirement some level of configurations in the rpd, which will need time to have it ready to use in the presentation service. Let's say some users want to set current date as default in one of the dashboard prompt and it must be done asap, we can't just go configure the dynamic variable if we are talking about making changes in live production.

Therefore, we have another option.

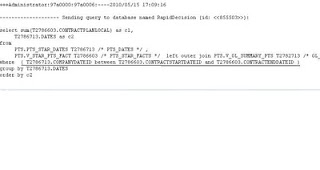

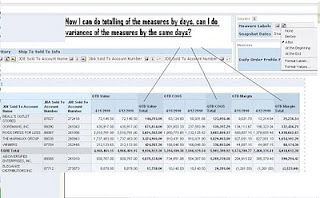

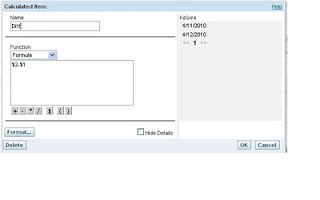

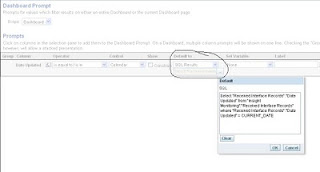

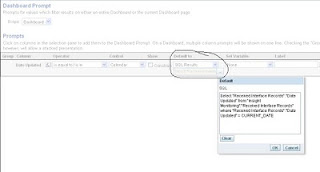

In prompt property, go to 'default to' drop down and select 'SQL results'

In the SQL result, enter this select statement (for my case): Select "Received Interface Records"."Date Updated" from "Insight Monitoring"."Received Interface Records" where "Received Interface Records"."Date Updated" = CURRENT_DATE

The table name and column name of this statement should be the same as displayed in column formula content, this is not where you expect to enter the physical SQL for database, so don't get confused with the statement you would enter dynamic initialization string.

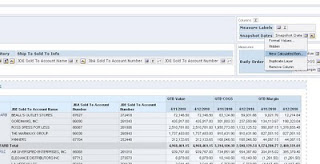

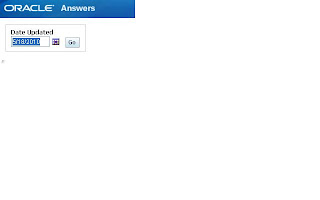

After that, let's preview this prompt:

This method will give you instant result, however, some level of technical skill is needed. So use it depending on the expectation of your project and what level of training the business users have.

til next time

Unlike column filters where we can enter 'current_date' in the SQL expression of the 'Add' tab of the filter property, we can't really do the same in dashboard prompt property. Therefore, the first way of doing it will be creating dynamic variable in Admin tool with a query statement like 'select sysdate from dual' in the initialization block, and call this dynamic variable in the dashboard prompt property (Go to prompt property ---Default to--- Server variable--- enter dynamic variable name).

I highly recommend creating this current date variable in every OBIEE project because it can be beneficial to a lot of people in a lot of areas with similar needs. However, creating this dynamic variable will requirement some level of configurations in the rpd, which will need time to have it ready to use in the presentation service. Let's say some users want to set current date as default in one of the dashboard prompt and it must be done asap, we can't just go configure the dynamic variable if we are talking about making changes in live production.

Therefore, we have another option.

In prompt property, go to 'default to' drop down and select 'SQL results'

In the SQL result, enter this select statement (for my case): Select "Received Interface Records"."Date Updated" from "Insight Monitoring"."Received Interface Records" where "Received Interface Records"."Date Updated" = CURRENT_DATE

The table name and column name of this statement should be the same as displayed in column formula content, this is not where you expect to enter the physical SQL for database, so don't get confused with the statement you would enter dynamic initialization string.

After that, let's preview this prompt:

This method will give you instant result, however, some level of technical skill is needed. So use it depending on the expectation of your project and what level of training the business users have.

til next time